Slave’s specified contribution to Master

In today’s article, we will see how a slave (data node) in a Hadoop cluster can contribute only a limited, specific amount of storage to the cluster.

Sounds interesting.. Let’s begin…

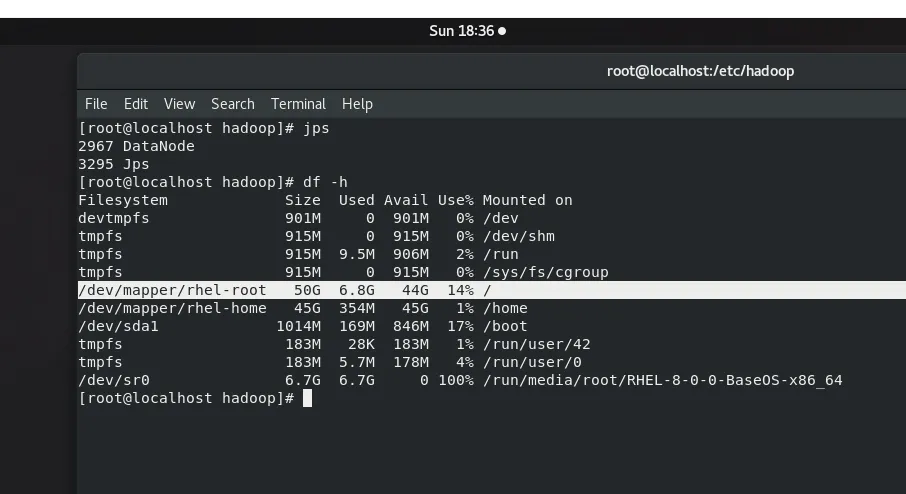

This is one of my data nodes. It has 50 GB on its main drive, i.e., the ‘/’ drive, and it contributes the entire 50 GB to the name node.

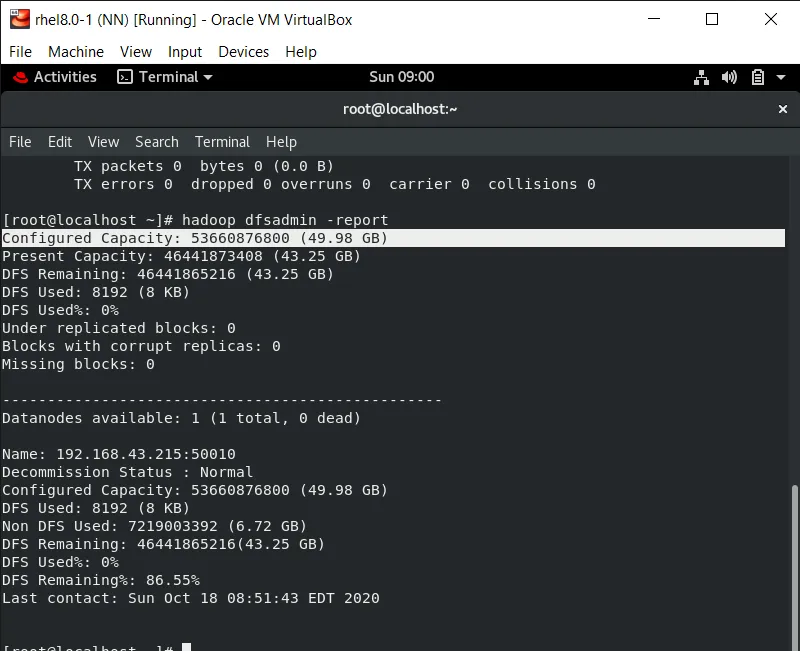

This is my name node, and here you can see that my data node has contributed its entire 50 GB of storage to the name node.

Now, let’s see how to contribute only a limited/specific amount of storage as a slave to the cluster.

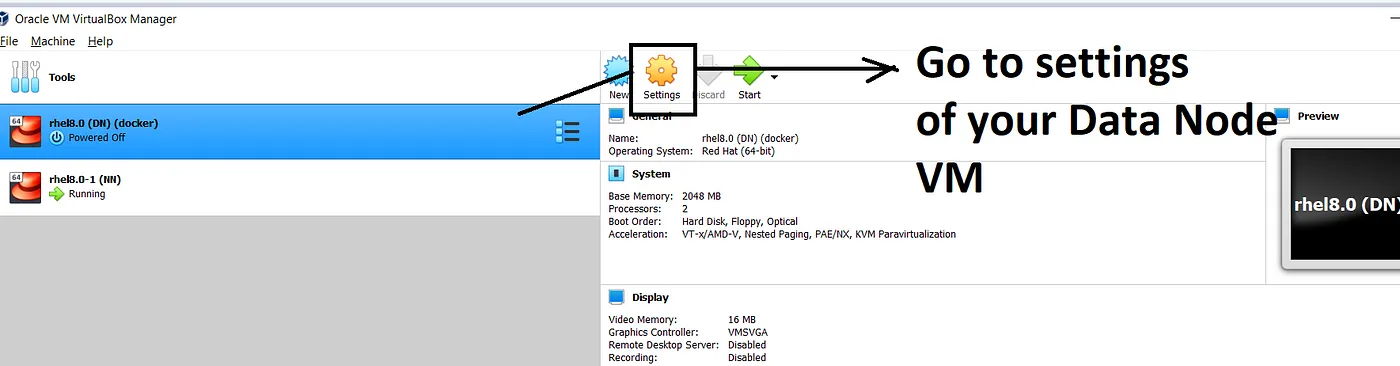

Step 1 : Shut down your data node

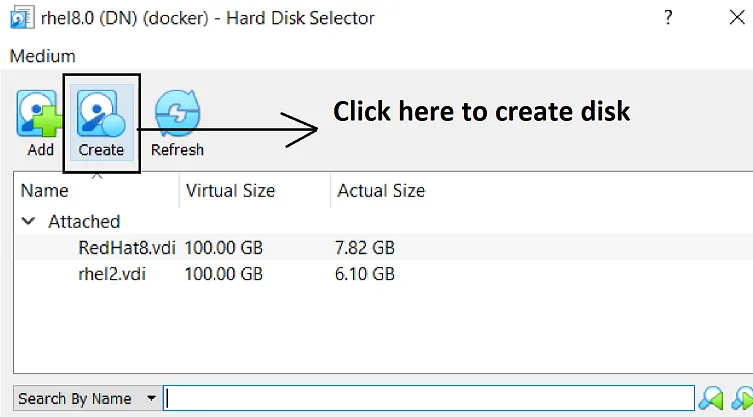

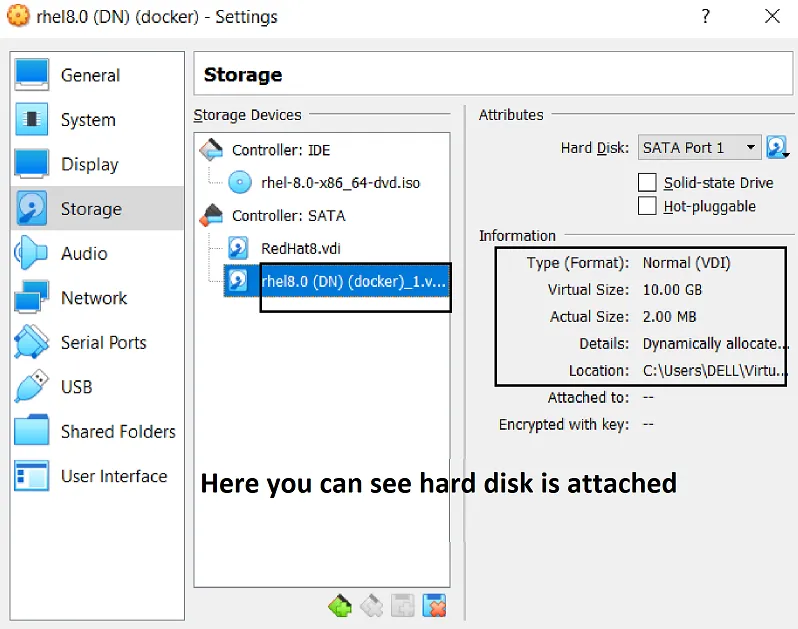

Step 2 : Go to settings

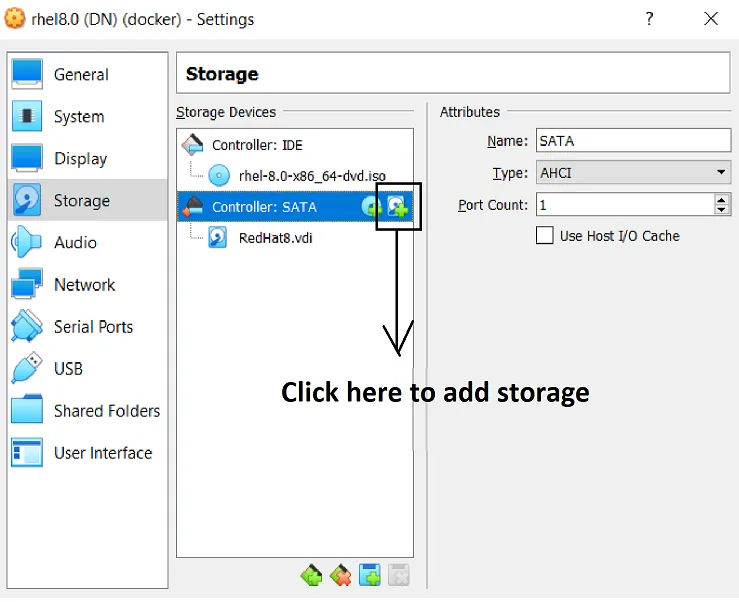

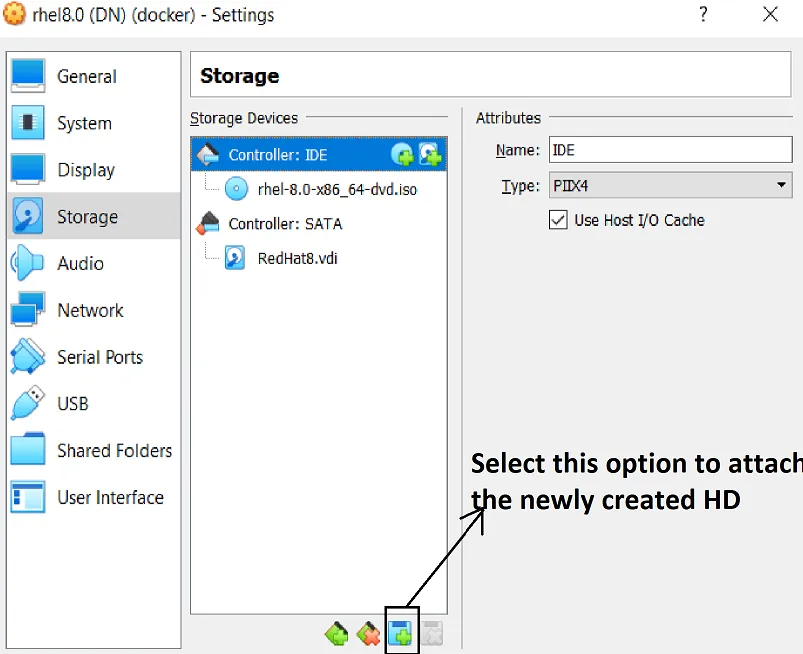

Step 3 : In Settings, go to the Storage section

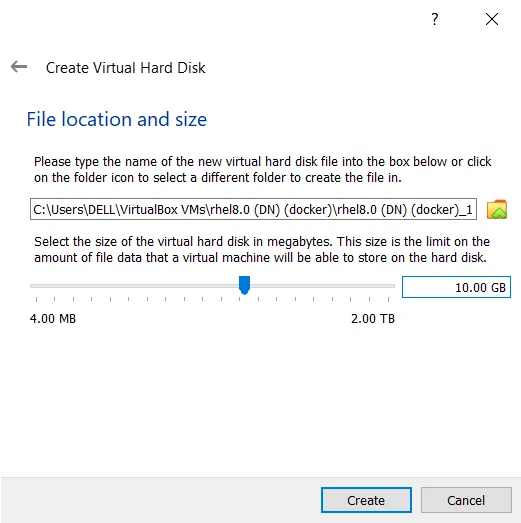

After this, add a new hard disk and choose the default options, as below…

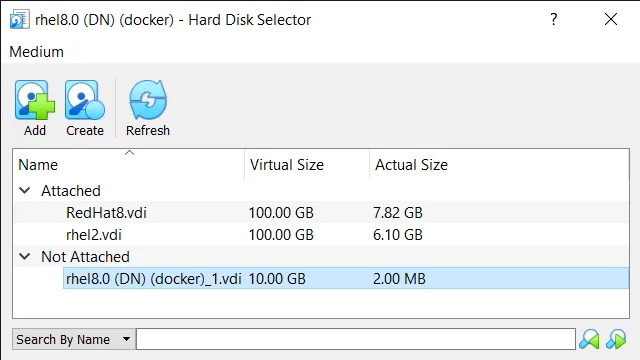

Now that we have created the new hard disk, we need to attach it to the VM.

Now, start your VM..

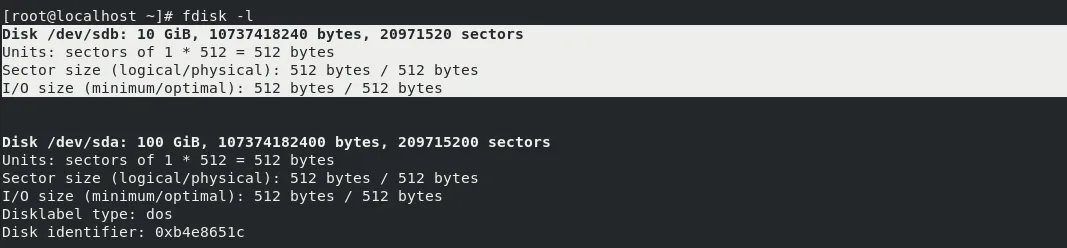

Now, in your VM, you can see the new hard disk by running the command:

fdisk -l

To make the new hard disk usable, we have to follow the steps below:

Step 1 : Check how much space you need and then create a partition of that size, as below.

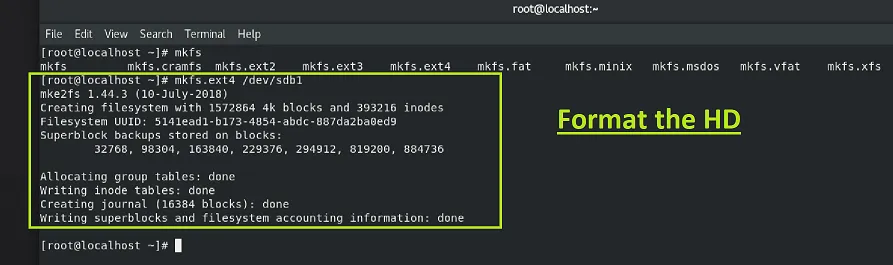
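The screenshot does this interactively. As a rough, non-interactive sketch of the same step, something like the following could work with `parted`. The device name `/dev/sdb` and the size `10GiB` are assumptions for illustration, so check the real device name with `fdisk -l` first. The `run` helper and `DRY_RUN` switch are hypothetical additions so that nothing is written to the disk unless you ask for it.

```shell
#!/usr/bin/env bash
# Sketch: create one partition of a chosen size on the newly attached disk.
DISK="${DISK:-/dev/sdb}"   # assumed name of the new disk; confirm with `fdisk -l`
SIZE="${SIZE:-10GiB}"      # assumed end point of the partition on the disk
DRY_RUN="${DRY_RUN:-1}"    # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

create_partition() {
  # Write a fresh MBR partition table (this wipes any existing table on DISK).
  run parted -s "$DISK" mklabel msdos
  # One primary partition from 1MiB up to SIZE; the rest of the disk stays unused.
  run parted -s "$DISK" mkpart primary ext4 1MiB "$SIZE"
}

create_partition
```

Run it once as-is to read the commands it would execute, then run it again as root with `DRY_RUN=0` once the device name and size look right.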

Step 2 : Format the new partition

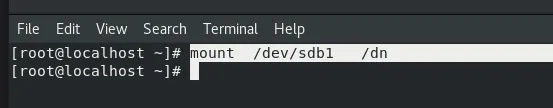
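For reference, formatting could look roughly like the sketch below. It assumes the new partition is `/dev/sdb1` and that an ext4 filesystem is wanted; both are assumptions, so adjust them to what your own `fdisk -l` output shows. The `run` helper and `DRY_RUN` switch are hypothetical and only there to keep the example harmless by default.

```shell
#!/usr/bin/env bash
# Sketch: put a filesystem on the new partition.
PART="${PART:-/dev/sdb1}"  # assumed partition name; confirm with `fdisk -l`
DRY_RUN="${DRY_RUN:-1}"    # 1 = only print the command, 0 = really run it

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

format_partition() {
  # Formatting erases whatever is currently stored on PART.
  run mkfs.ext4 "$PART"
}

format_partition
```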

Step 3 : Mount the partition. We mount it on the folder that the data node shares with the name node.

Now we can start our name node and data node and see what happens…
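A minimal sketch of the mount step is shown here. The partition `/dev/sdb1` and the folder `/dn` are assumptions; the folder should be whatever your data node is configured to store blocks in (the `dfs.datanode.data.dir` property in `hdfs-site.xml`, or `dfs.data.dir` on Hadoop 1). The `run` helper and `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: mount the new partition on the data node's storage folder.
PART="${PART:-/dev/sdb1}"     # assumed partition name
DATA_DIR="${DATA_DIR:-/dn}"   # assumed data node folder from hdfs-site.xml
DRY_RUN="${DRY_RUN:-1}"       # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

mount_data_dir() {
  # Make sure the folder exists, then mount the partition on it.
  run mkdir -p "$DATA_DIR"
  run mount "$PART" "$DATA_DIR"
  # Show the size of the mounted filesystem; it should match the partition.
  run df -h "$DATA_DIR"
}

mount_data_dir
```

A mount made this way does not survive a reboot, so an `/etc/fstab` entry is needed if the setup should be permanent.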

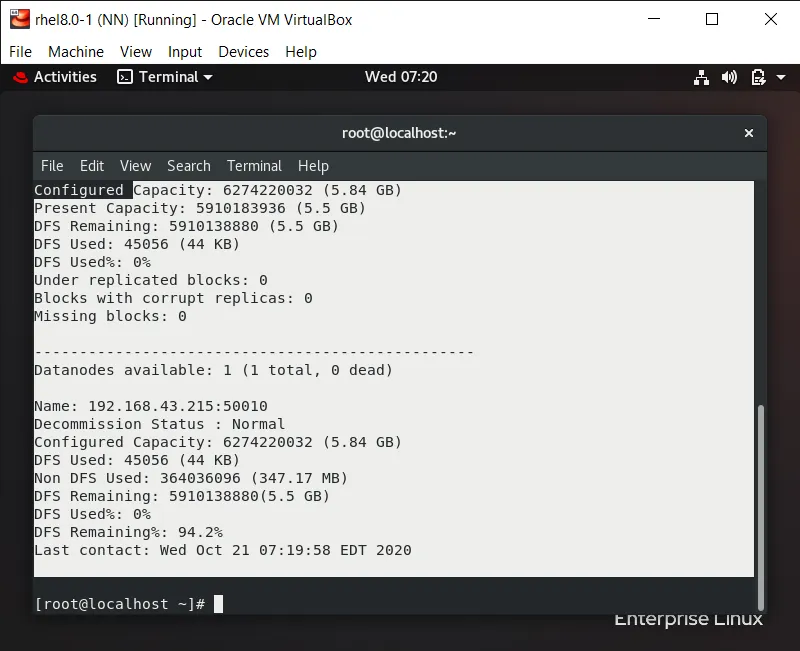

And here you can see that the data node is now contributing only the limited space to the name node.
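The same check can be done from the command line instead of the web page. The sketch below assumes the `hdfs` command is on the PATH (Hadoop 2 or later); the `capacity_lines` helper is a hypothetical name used only for this example.

```shell
#!/usr/bin/env bash
# Sketch: show only the capacity lines of the HDFS cluster report.

# Filter a dfsadmin report (read from stdin) down to its capacity lines.
capacity_lines() {
  grep 'Configured Capacity'
}

if command -v hdfs >/dev/null 2>&1; then
  # On Hadoop 1 the equivalent command is `hadoop dfsadmin -report`.
  hdfs dfsadmin -report | capacity_lines
else
  echo "hdfs command not found; run this on a node of the cluster"
fi
```

The configured capacity reported for the data node should now be close to the size of the new partition rather than the full 50 GB.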

We can also do this on an AWS instance by attaching an EBS volume of a particular size and performing the same partitioning steps.
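As a rough sketch, the EBS part could be done with the AWS CLI as below. Every value here (availability zone, size, instance ID, volume ID, device name) is a placeholder assumption, not a real resource, and the `run` helper and `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create an EBS volume of a fixed size and attach it to the data node instance.
AZ="${AZ:-us-east-1a}"                            # must be the instance's availability zone
SIZE_GIB="${SIZE_GIB:-10}"                        # size the data node should contribute
INSTANCE_ID="${INSTANCE_ID:-i-0123456789abcdef0}" # placeholder instance ID
VOLUME_ID="${VOLUME_ID:-vol-0123456789abcdef0}"   # placeholder; use the VolumeId that create-volume returns
DEVICE="${DEVICE:-/dev/sdf}"                      # device name requested for the attachment
DRY_RUN="${DRY_RUN:-1}"                           # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

create_volume() {
  run aws ec2 create-volume --availability-zone "$AZ" --size "$SIZE_GIB" --volume-type gp2
}

attach_volume() {
  run aws ec2 attach-volume --volume-id "$VOLUME_ID" --instance-id "$INSTANCE_ID" --device "$DEVICE"
}

create_volume
attach_volume
```

Inside the instance the volume may show up under a different name than the one requested (for example `/dev/xvdf`), so check `fdisk -l` before partitioning.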

...
